Many programmers regard multithreading as a magic bullet for performance problems. That assumption is often misguided, as many developers’ experiences show. Seasoned engineers describe it as almost a rite of passage to believe that ‘more threads = faster execution,’ only to discover that the threaded version of their application runs considerably slower. The dream of linear speedup from additional threads quickly turns into a nightmare of debugging and performance profiling.

One of the underlying issues is a misconception about where to optimize. Donald Knuth’s assertion that ‘premature optimization is the root of all evil’ remains relevant today. However, Knuth wasn’t advocating for ignoring optimization entirely; he stressed the importance of targeting the small, critical parts of the code. Finding those parts is harder than it used to be: contemporary software often lacks the straightforward inner loops of older scientific programs, and today’s interactive applications feature complex event loops and workflows that don’t fit neatly into the old paradigms.

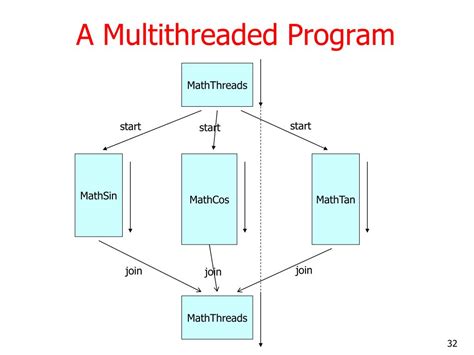

Concurrency introduces a labyrinth of potential issues like deadlocks, race conditions, and resource contention. Libraries and frameworks like the standard C library (libc) were designed in an era that didn’t foresee the extensive use of parallel execution. These older designs often assume a single-threaded environment, creating hidden pitfalls for the modern developer. For example, `random()` uses global state, which leads to significant lock contention when accessed by multiple threads, degrading performance.
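
The contention pattern described above can be sketched in Go. This is an illustration under stated assumptions, not a reproduction of any libc implementation: `lockedRNG`, `runWorkers`, `drawShared`, and `drawPerWorker` are hypothetical names, and the mutex stands in for whatever lock guards a generator's global state. The second variant gives each worker its own generator, so no lock is shared.

```go
package main

import (
	"fmt"
	"math/rand"
	"sync"
	"time"
)

// lockedRNG is a hypothetical stand-in for a libc-style generator:
// one piece of shared state behind a single lock.
type lockedRNG struct {
	mu  sync.Mutex
	rng *rand.Rand
}

func (l *lockedRNG) Intn(n int) int {
	l.mu.Lock()
	defer l.mu.Unlock()
	return l.rng.Intn(n)
}

// runWorkers starts `workers` goroutines, asks newDraw for each worker's
// draw function, and returns how many draws landed in [0, 100).
func runWorkers(workers, draws int, newDraw func(worker int) func() int) int {
	results := make([]int, workers)
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func(w int) {
			defer wg.Done()
			draw := newDraw(w)
			inRange := 0
			for i := 0; i < draws; i++ {
				if v := draw(); v >= 0 && v < 100 {
					inRange++
				}
			}
			results[w] = inRange // each worker writes only its own slot
		}(w)
	}
	wg.Wait()
	total := 0
	for _, r := range results {
		total += r
	}
	return total
}

// drawShared makes every worker contend for the same lock.
func drawShared(workers, draws int) int {
	shared := &lockedRNG{rng: rand.New(rand.NewSource(1))}
	return runWorkers(workers, draws, func(_ int) func() int {
		return func() int { return shared.Intn(100) }
	})
}

// drawPerWorker gives each worker its own generator, so no lock is needed.
func drawPerWorker(workers, draws int) int {
	return runWorkers(workers, draws, func(w int) func() int {
		local := rand.New(rand.NewSource(int64(w) + 1))
		return func() int { return local.Intn(100) }
	})
}

func main() {
	const workers, draws = 8, 1000000

	start := time.Now()
	drawShared(workers, draws)
	fmt.Println("shared, locked generator:", time.Since(start))

	start = time.Now()
	drawPerWorker(workers, draws)
	fmt.Println("one generator per worker:", time.Since(start))
}
```

On a multi-core machine the per-worker variant will usually finish sooner, but the size of the gap depends on core count and scheduling, so it is worth measuring rather than assuming.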

Improvements in Inter-Process Communication (IPC) and asynchronous operation models have also changed how we think about multithreading. In many cases, using processes instead of threads can simplify development and debugging, though at the cost of some performance overhead. This strategy avoids the problems of shared memory but introduces new costs: data must be serialized to cross the process boundary, and inter-process communication adds latency.
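
The serialization cost mentioned above can be made concrete with a small Go sketch. It does not spawn a real child process; it only contrasts reading shared memory through a pointer with the encode/decode round trip that data would need before crossing a pipe or socket. The `Job` type and both function names are hypothetical, and JSON is just one possible wire format.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Job is a hypothetical unit of work, used only for illustration.
type Job struct {
	ID      int       `json:"id"`
	Samples []float64 `json:"samples"`
}

// sumShared is the thread-style path: the worker reads the caller's
// memory directly through a pointer, so nothing is copied.
func sumShared(j *Job) float64 {
	total := 0.0
	for _, s := range j.Samples {
		total += s
	}
	return total
}

// sumViaBytes is the process-style path: the job is flattened to bytes
// and rebuilt on the "other side" before any work happens. It returns
// the sum and the number of bytes that would travel over the wire.
func sumViaBytes(j *Job) (float64, int, error) {
	wire, err := json.Marshal(j)
	if err != nil {
		return 0, 0, err
	}
	var received Job
	if err := json.Unmarshal(wire, &received); err != nil {
		return 0, 0, err
	}
	return sumShared(&received), len(wire), nil
}

func main() {
	job := &Job{ID: 1, Samples: []float64{1.5, 2.5, 4}}

	fmt.Println("shared-memory sum:", sumShared(job))

	sum, size, err := sumViaBytes(job)
	if err != nil {
		panic(err)
	}
	fmt.Println("serialized sum:", sum, "bytes on the wire:", size)
}
```

Both paths produce the same answer; the difference is the extra copy and encoding work, which grows with the size of the data and comes on top of the latency of the channel itself.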

Certain modern languages and frameworks attempt to abstract away the complexities of concurrent execution. For example, as of Go 1.20 the top-level functions in `math/rand` are seeded automatically and `rand.Seed` is deprecated. A program that never calls `rand.Seed` can be served by a fast per-thread generator inside the runtime, while calling `rand.Seed` switches to a single, lock-protected seeded generator. In the common case, this removes the need to manually manage random number generator state for different threads. Despite these advancements, most high-performance applications, such as game engines, still require careful hardware-aware tuning and specific optimizations that go beyond what conventional Object-Oriented Programming (OOP) models generally offer.
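
Returning to the Go random-number example, here is a minimal sketch of what this looks like from the caller's side, assuming Go 1.20 or later. The function names `rollDice` and `reproducibleRolls` are hypothetical. The first calls the package-level `rand.Intn` from several goroutines without ever calling `rand.Seed`; the second shows the explicit `rand.New` route for cases that need a repeatable sequence.

```go
package main

import (
	"fmt"
	"math/rand"
	"sync"
)

// rollDice has each goroutine call the package-level rand.Intn directly.
// The top-level functions are safe for concurrent use, and since Go 1.20
// they are seeded automatically, so there is no rand.Seed call here.
func rollDice(workers, rolls int) []int {
	counts := make([]int, 6)
	var mu sync.Mutex
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			local := make([]int, 6) // tally privately, merge once at the end
			for i := 0; i < rolls; i++ {
				local[rand.Intn(6)]++
			}
			mu.Lock()
			for face, c := range local {
				counts[face] += c
			}
			mu.Unlock()
		}()
	}
	wg.Wait()
	return counts
}

// reproducibleRolls uses an explicitly seeded generator owned by the
// caller, which yields the same sequence for the same seed.
func reproducibleRolls(seed int64, rolls int) []int {
	rng := rand.New(rand.NewSource(seed))
	out := make([]int, rolls)
	for i := range out {
		out[i] = rng.Intn(6) + 1
	}
	return out
}

func main() {
	fmt.Println("face counts:", rollDice(4, 60000))
	fmt.Println("repeatable sequence:", reproducibleRolls(42, 5))
}
```

A generator created with `rand.New` is not safe to share between goroutines without extra locking, so the explicit route fits best when each goroutine owns its own instance.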

The conversation often extends to educational paradigms and tooling for new engineers. Many programmers first encounter these issues in academic courses on Parallel Computing, where the practical understanding of profiling, debugging, and intelligently using thread-safe libraries often takes a back seat to theoretical knowledge. The challenge is compounded by the inadequacies of existing abstractions that fail to shield programmers from underlying complexity while introducing performance bottlenecks of their own, like unnecessary mutexes and locking mechanisms.

In conclusion, while concurrency and multithreading can offer substantial performance benefits, they are not a guaranteed path to better performance. Engineers must be judicious in their use of these tools, carefully profiling and understanding the implications of their design choices. Learning from pre-existing libraries, and understanding both the legacy and modern approaches to problem-solving, can help in navigating the intricacies of effective multithreading. For those willing to delve into the nitty-gritty, languages like Rust offer strong guarantees against common concurrency issues, although they come with their own learning curves and complexities. Regardless of the tools chosen, the principle remains clear: optimize wisely, and understand the broader impact of your architectural decisions.
