The advent of large language models (LLMs) has opened new possibilities in many industries, and financial statement analysis is one potential application. These models, popularized by systems such as GPT-4, are changing how insights are extracted from complex financial data. Financial statement analysis has traditionally relied on experts who manually evaluate a range of metrics to reach their conclusions. With LLMs, the expectation is that much of this process can be automated and refined, making it quicker and potentially more accurate. The implications look promising on the surface, but the picture is mixed, with both optimism and skepticism coming from stakeholders across the finance sector.

Over the years, financial statement analysis has evolved from basic human interpretation to more sophisticated computational models. Even so, as one commenter highlighted, there is skepticism about the idea that LLMs can revolutionize the field. The same discussion offered an analogy: tricking multiple LLMs at once is like creating a file that hashes to the same value under several different hashing techniques, which is theoretically possible but practically difficult. This perspective is significant. While LLMs can analyze patterns and general sentiment, the finesse of human intuition in recognizing nuanced, context-specific indicators is hard to replicate. There remains a notable gap between what these models can do and the expertise developed through years of human experience.

Despite these concerns, there are examples where algorithms have delivered significant results. Sentiment analysis on financial statements isn’t a novel concept; it dates back to the 1980s. As another commenter noted, the technique has evolved from counting positive and negative words to more complex sentiment models. LLMs extend this by synthesizing extensive textual and tabular data, which can offer deeper insights than traditional models. Scoring each statement in a dataset might look like this:

```python
import pandas as pd

# sentiment_analysis is assumed to be defined elsewhere; it maps a string to a sentiment score.
df = pd.read_csv('financial_statements.csv')
df['Sentiment'] = df['text'].apply(sentiment_analysis)
```

However, critics argue that LLMs are not a magical, all-solving tool. In practice, executives can and do craft their statements in ways that game these sentiment models, producing overly positive financial outlooks that don’t fully reflect the underlying realities.
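To make the earliest form of this technique concrete, here is a minimal sketch of the word-counting approach described above. The word lists are tiny, hypothetical examples chosen for illustration (real lexicons are far larger), and the function name `sentiment_analysis` is only an assumption about what a scorer with that name might do.

```python
import re

# Hypothetical, deliberately tiny word lists for illustration only.
POSITIVE_WORDS = {"growth", "strong", "improved", "profit", "record"}
NEGATIVE_WORDS = {"loss", "decline", "weak", "impairment", "litigation"}


def sentiment_analysis(text: str) -> float:
    """Score text as (positive - negative) / matched words, in [-1, 1].

    Returns 0.0 when no word from either list appears.
    """
    # Lowercase and keep alphabetic runs only, so punctuation is ignored.
    tokens = re.findall(r"[a-z]+", text.lower())
    positive = sum(token in POSITIVE_WORDS for token in tokens)
    negative = sum(token in NEGATIVE_WORDS for token in tokens)
    matched = positive + negative
    return 0.0 if matched == 0 else (positive - negative) / matched
```

A scorer this simple also shows why gaming is easy: swapping a handful of words in a statement changes the score without changing the underlying numbers.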

The role of LLMs in financial analysis extends to deeper applications. For instance, giving a model all relevant financial statements of a company and its competitors could support comprehensive comparative analysis. This broader context, which brings together many financial elements, can potentially produce a more holistic financial portrait. However, some pointed out the limits of current LLM context windows and questioned whether the models can handle such extensive datasets efficiently. As one expert noted, a larger context window might help but is still not a panacea.
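One common workaround for a limited context window is to split long filings into pieces that each fit a token budget. The sketch below illustrates the idea under stated assumptions: the function name, the default budget, and the four-characters-per-token estimate are all hypothetical, and a real token count depends on the specific model's tokenizer.

```python
def split_into_chunks(text: str, max_tokens: int = 1000,
                      chars_per_token: int = 4) -> list[str]:
    """Greedily pack paragraphs into chunks under an approximate token budget.

    Token counts are estimated as characters / chars_per_token, which is a
    rough heuristic and not a real tokenizer. A single paragraph larger than
    the budget is kept whole rather than cut mid-sentence.
    """
    budget = max_tokens * chars_per_token
    chunks: list[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= budget or not current:
            # Still fits, or this is the first paragraph of a new chunk.
            current = candidate
        else:
            # Adding this paragraph would exceed the budget: close the chunk.
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks
```

Chunking keeps each request within the window, but it also means the model never sees the whole filing at once, which is part of why a bigger window alone does not settle the question.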

A further question is what exactly these LLMs achieve compared with the traditional machine learning models already used in quantitative finance. As another commenter pointed out, a three-layer neural network from 1989 remains competitive in accuracy with a modern LLM, which underscores that more complexity doesn’t always mean better outcomes. Benchmarks based on historical models such as those of Ou and Penman (1989) still show robust performance. This raises the question of whether LLMs offer enough of a unique advantage to justify their complexity and computational demands.

Thus, while the idea of a versatile, generalist model capable of producing detailed financial insights is appealing, it comes with caveats. Critics argue that it isn’t wise to expect LLMs to outperform specialists in every situation. Financial markets are intricate and multifaceted, and no single model can capture all of their dimensions effectively. Moreover, there is potential for misuse, and the risk of running into Goodhart’s law is high: when a measure becomes a target, it ceases to be a good measure. This warrants a balanced approach that blends the strengths of LLMs with human expertise to create the most effective analytical frameworks.

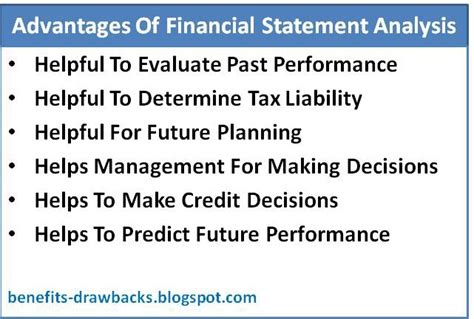

In conclusion, the integration of LLMs in financial statement analysis presents both opportunities and challenges. On the one hand, their ability to rapidly process and analyze large volumes of data can lead to new insights and efficiencies. On the other, the limitations in current modeling techniques and the potential for statement gaming by corporate executives could undermine the reliability of these systems. The ongoing evolution in AI and machine learning technologies makes it clear that a combined approach that leverages both human insight and cutting-edge technology will likely be the most fruitful path forward in the world of financial analytics.
