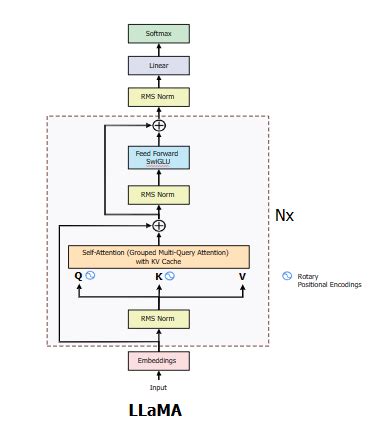

A recent GitHub repository that implements Llama3 from scratch has drawn considerable interest from the AI community. As readers work through the code and documentation, the discussion has turned to the evolution of neural network (NN) architectures and the ongoing debate over transformer models. One recurring theme in the comments is that the standardization of NN structures, particularly around the transformer, marks a significant milestone in AI research.

Commentators have highlighted the prevalence of transformer models across various domains, from language processing to image recognition and robotics. The versatility of transformers in handling diverse data types and tasks has solidified their position as a cornerstone of modern AI applications. However, concerns have been raised about the over-reliance on transformers, with some experts cautioning against blindly applying them to all scenarios without careful consideration.

The discussion also covers the optimization challenges researchers face when balancing model complexity against computational efficiency. While uniform structures like transformers make training and parallelization easier, there is growing demand for approaches that improve memory and power efficiency in AI systems. Models like MoE (Mixture of Experts), which route each input to a small subset of expert sub-networks so that only part of the model's parameters is active at a time, offer a glimpse of where such gains might come from. In practice, MoE layers are often used inside transformer architectures rather than as a replacement for them.
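To make the Mixture of Experts idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is not taken from the Llama3 repository under discussion; the names `make_expert`, `moe_forward`, and `gate_weights` are hypothetical, and each "expert" is a trivial scaling function standing in for a real feed-forward block. The point is only to show that a gate scores every expert but just the top few are actually evaluated.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale):
    # Hypothetical expert: elementwise scaling, a stand-in for a feed-forward block.
    return lambda x: [scale * v for v in x]

def moe_forward(x, gate_weights, experts, top_k=2):
    # Gate score per expert: dot product of the input with that expert's gate vector.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Keep only the top_k highest-scoring experts; the rest are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the selected scores into mixing weights.
    probs = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)
        out = [o + p * v for o, v in zip(out, y)]
    return out, top

# Illustrative values only (assumed, not from any real model).
experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, used = moe_forward([2.0, 1.0], gate_weights, experts, top_k=2)
print(used, out)
```

With four experts and `top_k=2`, only half of the expert computations run for this input, which is the source of the efficiency argument: total parameter count can grow without a proportional increase in per-input compute.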

Furthermore, the comments shed light on the iterative nature of AI advancements, with each new model iteration building upon previous successes and shortcomings. The delicate balance between exploring new architectures and optimizing existing ones poses a strategic challenge for researchers and developers in the field. The discussion extends to the trade-offs between dynamic and static model architectures, highlighting the ongoing quest for optimal performance and scalability.

Amidst debates on model architectures and optimization strategies, ethical considerations surrounding bias and censorship in AI systems have also surfaced. Commentators have expressed divergent views on the potential societal impacts of AI models reflecting cultural biases and value systems. The conversation around the intersection of AI technology, ethics, and user experience reflects a broader discourse within the AI community on responsible AI development.

In conclusion, the user comments on the Llama3 implementation offer a multifaceted view of how neural network architectures are evolving. From the dominance of transformer models to the search for efficient and scalable AI systems, the discussion reflects how quickly AI research and development is moving. As researchers continue to push the field forward, community discussions like this one provide useful perspectives on where neural networks and machine learning may be heading.
