The recent discussion of ‘zero-shot’ generalization in AI models has drawn significant interest and debate within the technology community. An analysis of image-text models such as CLIP and text-to-image models such as Stable Diffusion shows how hard it is to achieve true generalization without large amounts of training data. The research highlights the role of data scale in model performance: how often a concept appears in the pretraining data is closely tied to how well the model learns and generalizes that concept.

One of the key takeaways from the study is that model performance improves only linearly as concept frequency in the pretraining data grows exponentially. In other words, performance scales roughly with the logarithm of frequency, so each further fixed gain requires multiplying the number of examples of a concept rather than adding a fixed amount. This finding underscores the importance of adequate representation across a diverse range of concepts. The ‘Let it Wag!’ benchmark introduced by the authors evaluates models on rare, long-tail concepts, offering insight into the limitations of current AI models on long-tail data distributions.
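The relationship described above can be made concrete with a small sketch. It fits a straight line to accuracy against the base-10 logarithm of concept frequency, then inverts the fit to estimate how many examples a target accuracy would need. The function names and the data points are hypothetical and chosen purely for illustration; they are not figures from the study.

```python
import math

def fit_log_linear(frequencies, accuracies):
    """Least-squares fit of accuracy = intercept + slope * log10(frequency)."""
    xs = [math.log10(f) for f in frequencies]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(accuracies) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accuracies))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def frequency_needed(target_accuracy, slope, intercept):
    """Invert the fitted line to estimate the concept frequency for a target accuracy."""
    return 10 ** ((target_accuracy - intercept) / slope)

# Synthetic data (an assumption for illustration, not results from the paper):
# every tenfold increase in frequency adds the same fixed amount of accuracy.
freqs = [10, 100, 1_000, 10_000]
accs = [0.2, 0.3, 0.4, 0.5]

slope, intercept = fit_log_linear(freqs, accs)
needed = frequency_needed(0.6, slope, intercept)
print(f"gain per 10x more data: {slope:.2f}")
print(f"examples needed for 0.6 accuracy: about {needed:,.0f}")
```

Under these made-up numbers, moving from 0.5 to 0.6 accuracy takes roughly ten times as many examples as reaching 0.5 did, which is the sense in which linear gains demand exponential data.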

Moreover, the discussion of how these findings might affect the broader field raises questions about the future trajectory of machine learning. Talk of an imminent ‘AI winter’, and what it would mean for research funding and innovation, looms large. Incremental improvements and continued work on existing methods are expected to persist, but stagnation and funding shortfalls pose a real risk to sustained progress.

The analysis of CLIP and its implications for zero-shot generalization invites a closer look at what drives model performance and how well learned concepts transfer. Comparisons with human cognition and learning help frame why AI models struggle with novel and rare concepts. As the quest for AGI continues, robust training data and improved model capabilities remain a central focus for researchers and practitioners.

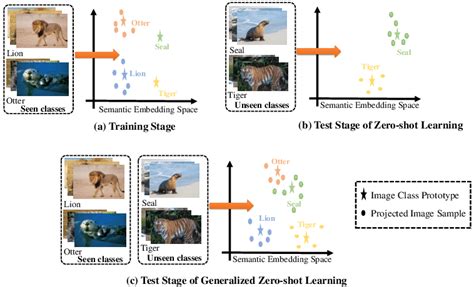

In essence, the debate over zero-shot learning and exponential data requirements shows how hard it is to achieve true generalization and robust performance across diverse tasks and concepts. Data scale, model architecture, and learning dynamics all interact, and a closer study of how models are trained and evaluated can inform where AI technologies, and the pursuit of artificial general intelligence, go next.

As the AI community grapples with exponential data requirements and the goal of zero-shot generalization, the need for continued research and interdisciplinary collaboration becomes increasingly apparent. By addressing the limitations identified in current models and exploring new approaches to data representation and model training, researchers can build more robust and versatile AI systems. The path to true zero-shot learning may be difficult, but sustained work on these problems leaves room for significant advances.
